SIGGRAPH 2006 Report


Overview

The 33rd Siggraph conference kicked off in Boston during the first week of August. On Monday, John Finnegan, the Conference Chair, welcomed all attendees. John noted several signs of the conference's growth, including 75 new companies added to the exhibition. The Siggraph 2006 committee also started a new Student Registration Donation Program that allowed attendees to donate funds to help local students attend the conference. This outreach program is a great example of how the industry is helping students. Also new this year were the Student Research Posters, which filled the front hall and remained on display throughout the conference. John then introduced several of the key conference chairs to speak about their respective areas.

Tom Craven, retired from Walt Disney, spoke about the Emerging Technologies venue, which had 18 countries represented and covered everything from haptics and displays to gaming and sensors. One team even showed technology to help the visually impaired. New this year was a special area called the "Fusion Midway" that held all the displays that could be classified as both art and technology. The image below shows the Morphovision display, which transforms and animates solid 3D objects using a digital projector.

Image courtesy of Takashi Fukaya, NHK Science and Technical Research Laboratories and ACM Siggraph 2006

Julie Dorsey, the Papers Chair, mentioned that a record 474 papers were submitted, of which 86 were selected. Selections were based on the merits of the research, and 15 countries were represented. The papers covered areas such as data capture, image synthesis and the simulation of physically based phenomena.

Terrance Mason chaired the Electronic and Animation Theaters. He announced that his committee received over 800 submissions from 20 different countries. Only 97 of these, or about 12%, were accepted, making the selection very competitive. Terrance also spoke of the committee's outreach effort to include 2D and mixed media among the submissions. The committee also instituted an electronic submission process, which let members watch all submissions on a computer instead of wading through a mountain of videotapes. This helped the committee manage its selection process more quickly and objectively.

Image courtesy of Eric Bruneton and ACM Siggraph 2006

Of all the animation submissions, One Rat Short by Alex Weil (Charlex) was named Best of Show, and 458nm by Jan Bitzer, Ilija Brunck, and Tom Weber (Filmakademie Baden-Württemberg) received the Special Jury Honors.

The Electronic Theater opened by giving visitors a chance to interact with the World's Largest Etch-a-Sketch. Each attendee received a wand with a red reflective marker on one side and a green reflective marker on the opposite side. The wands were detected throughout the audience and used to control the Etch-a-Sketch shown on the monitors at the front of the hall.

The Art Gallery was chaired by Bonnie Mitchell and comprised four venues. The first was the Art Gallery itself, which featured 2D images, collages, fabrics, fiber art, procedural works, animations, and select 4D installations that change over time. The second was Electronic Mediated Performances, where 28 separate performances were scheduled throughout the conference. The third was the Charles Csuri Retrospective, a diverse collection of works by graphics pioneer Charles Csuri spanning 43 years. The fourth was the Fusion Midway, which featured works that combine art and technology for a highly interactive experience.

Image courtesy of Carlo Sequin, University of California, Berkeley and ACM Siggraph 2006

The conference keynote was delivered by Joe Rohde, Executive Designer and Vice President, Walt Disney Imagineering. His presentation, "From Myth to Mountain: Insights Into Virtual Placemaking," detailed how Joe and his team developed the new Expedition Everest thrill ride for Disney's Animal Kingdom. Rohde spoke of the extensive travels his research for the project required, which took him to places as diverse as Nepal, Tanzania and the Himalayas. He also spoke about how that research focused on conservation. He stated that "we are all involuntary storytellers," and that storytelling can be dissected into theme, design consistency, and research for realism. He then explained how each of these areas played an important part in the design of the attraction.

Three awards were presented at the conference. The Computer Graphics Achievement Award was given to Thomas W. Sederberg, Brigham Young University. The Significant New Researcher Award was presented to Takeo Igarashi, University of Tokyo, and the ACM Siggraph Outstanding Service Award was presented to John M. Fujii, Hewlett-Packard Company.

The final attendance count for the conference was 19,764. Next year's conference will be held in San Diego, CA.

Siggraph 2006 Exhibition

The Siggraph 2006 Exhibition was a noisy, crowded, busy bundle of activity that appealed to the senses of all attendees. I didn't have a chance to see all the booths, preview all the demos or speak with all the exhibitors, but what I saw only made me want to see more. Below are some of the highlights:

Pixar

Pixar once again handed out designer wind-up teapots and posters for its latest movie, Cars, drawing lines that stretched across the show floor. Cardboard poster tubes branded with the Pixar logo could be seen protruding from attendees' belongings for the rest of the week.

Movie Productions Galore

Many of the key animation studios had booths featuring their latest productions and collecting resumes for future ones. Throughout the hall, you could catch glimpses of animated features that are already released or scheduled for release, including DNA Productions' The Ant Bully, Disney's Meet the Robinsons, PDI's Everyone's Hero, and Sony Pictures Imageworks' Monster House and Open Season.

Learning New Skills

Several exhibitors offered opportunities to learn new skills in sit-down tutorial sessions. Digital-Tutors was at the Softimage booth giving insights into Softimage XSI, an assortment of drawing tutorials was offered at the Sony booth, and Adobe provided training on its broad range of products, including After Effects, Photoshop and Illustrator.

3D Controllers

Sandio Technologies showed a 3D mouse with extra buttons that allow movement not possible with a traditional mouse. Called the 3DEasy, the unit is priced at $79.99; it offers users of 3D applications better usability and gives gamers an alternative to expensive 3D controllers. A free SDK is available for developers, and the mouse ships with plug-ins for more than 20 popular games.

Image courtesy of Sandio Technology Corp.

For a more professional 3D controller, 3Dconnexion offers the SpacePilot, a robust device with 21 speed keys embedded next to the controller and an LCD screen that dynamically labels several generic keys. The SpacePilot includes plug-ins for over 100 applications, making it easy to integrate with your existing software.

Immersive Environment Camera

Immersive Media was showcasing a spherical camera with 11 lenses for capturing immersive environments. The entire camera weighs only 2.5 pounds and is easy to carry around. Useful for tourism and gaming environments, the camera captures multiple viewpoints that can then be viewed, panned and zoomed. It can also be set to capture GPS data simultaneously.

New ZBrush Features

In the Pixologic booth, several new ZBrush features were on display. According to Jamie Labelle, head of marketing, the release of ZBrush 2.5 is still several months off because "we want to do it right." The new features include better import and export options and the ability to transfer geometry between objects.

Instant Character Creation

A company called Darwin Dimensions showed off an interesting product called Evolver. The software comes loaded with human characters (additional characters can be purchased separately), and new characters can be created by combining the attributes of the loaded ones. The resulting characters are unique, fully rigged and ready to use, without requiring that a model be built from scratch.

Personal 3D Scanner

NextEngine Inc. was showing its compact Desktop 3D Scanner. Priced at $2,495, the NextEngine scanner is affordable enough to put on every modeler's desk, eliminating the bottleneck of scheduling time on an expensive scanning system. The scanner uses proprietary MultiStripe Laser Triangulation (MLT) technology and scans objects the size of a shoebox. It also uses markers, so an object can be repositioned to scan occluded areas.
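NextEngine's MLT technology is proprietary, so the following is only a minimal sketch of the general principle of laser triangulation that such scanners build on: a laser and a camera sit a known baseline apart, and the angles at which each one "sees" the same lit point fix that point's distance. The function name and the angle convention (both angles measured from the baseline, in radians) are assumptions for illustration.

```python
import math


def triangulate_depth(baseline, laser_angle, camera_angle):
    """Perpendicular distance from the laser-camera baseline to a lit point.

    Generic laser triangulation, not NextEngine's MLT algorithm (assumption).
    baseline: distance between laser and camera (any length unit).
    laser_angle, camera_angle: angles in radians between the baseline and
    the laser ray / the camera's line of sight to the lit point.
    """
    apex = laser_angle + camera_angle
    if not 0.0 < apex < math.pi:
        raise ValueError("rays do not intersect in front of the baseline")
    # Height of a triangle with base `baseline` and base angles a and b:
    # h = baseline * sin(a) * sin(b) / sin(a + b)
    return baseline * math.sin(laser_angle) * math.sin(camera_angle) / math.sin(apex)


# A multi-stripe scanner would repeat this for every lit point on many stripes.
depth = triangulate_depth(10.0, math.radians(60), math.radians(60))
print(round(depth, 3))  # 8.66
```

Projecting many stripes at once, as the MLT name suggests, mainly multiplies how many points can be triangulated per camera frame.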

Image courtesy of NextEngine Inc.

Plant and Tree Modeling Plug-Ins

If your game environments require outdoor scenes featuring plants, trees and other vegetation, check out the natFX plug-in from Bionatics. Available for Maya or 3ds Max, it lets you select from a library of models, each of which can be displayed as low-res billboards or high-res geometry. You can also change the season, add wind effects or include normal maps.

Another method for growing plants is the Xfrog plug-in from Greenworks Organic Software. This plug-in, which is available for Maya, 3ds Max and Cinema 4D, builds leaves, flowers and seeds at a cellular level.

Markerless Motion Capture

Mova was showing off a system that performs motion capture without markers. The Contour system works by applying phosphorescent makeup to the performer's skin and to any cloth to be captured. The motion is then captured on a stage in front of an array of Kino Flo fluorescent lights that flash on and off at 90 to 120 frames per second. The system also includes two sets of cameras for capturing textures. It has sub-millimeter precision and can capture wrinkles, dimples and skin creases. Because the system is markerless, no marker clean-up is necessary.

The Act

Although this wasn't E3, a local company called Cecropia was displaying a stand-alone game called The Act. It featured fluid animation and a knob that controlled how boldly the main character approached his current situation. Reminiscent of Dragon's Lair, the goal was to progress from scene to scene without scaring the girl away or upsetting your boss. You can find more information on the game/kiosk at www.cecropia.com.

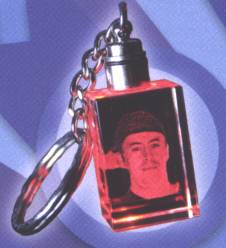

3D Photo-Crystals

Another novelty item was a photo kiosk that could digitize a visitor's face and use lasers to etch it as a 3D image into a 1-inch crystal cube keychain. Attendees lined up to pay $10 a pop to walk away with one of these gems.

Emerging Technologies at Siggraph 2006

The Emerging Technologies venue at Siggraph 2006 held some real treasures. I had a chance to walk through it with Tom Craven, the Siggraph 2006 Emerging Technologies Chair. The venue held a wide variety of displays, including a special area known as the "Fusion Midway" where art and technology were combined.

Front and center in the Emerging Technologies venue was a large array of white balloons attached to computer-controlled motors that moved the balloons up and down to create intriguing patterns. This display was created by Masahiro Nakamura and a team at the University of Tsukuba in Japan. Enhanced with lights that changed the colors of the balloons, the display was pure eye candy and provided a fitting welcome to a venue devoted to the future of interactive technologies.

The Virtual Humanoid display featured a human-shaped robot draped with green cloth onto which a character's image could be projected. The goal is to enhance VR simulations by providing a physical object to interact with. When you shake the robot's hand, the computer senses your actions and transfers them to the person at the other end. A physical model provides the tactile feedback that makes these interactions feel more real.

Image courtesy of Michihiko Shoji, NTT DoCoMo and ACM Siggraph 2006

The Forehead Retina System display was developed to help visually impaired people navigate the real world. The system includes a camera that captures an image of the objects directly in front of the wearer. The captured image is then analyzed and relayed to a set of 512 electrodes mounted in a headband. By learning to interpret these impulses, a visually impaired person can navigate a room full of obstacles unaided.
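The description above does not say how the camera image is mapped onto the electrodes, so the following sketch simply assumes a 16 x 32 grid (512 electrodes), averages the camera pixels that fall under each electrode, and switches on the electrodes whose region is brighter than a threshold. The grid shape, function names and threshold are hypothetical; brightness stands in for whatever feature the real system extracts, and its actual image analysis is likely more sophisticated.

```python
def image_to_electrodes(image, rows=16, cols=32):
    """Block-average a grayscale image (rows of 0.0-1.0 values) onto an electrode grid.

    The 16 x 32 layout (512 electrodes) is an assumption for illustration.
    """
    height, width = len(image), len(image[0])
    if height % rows or width % cols:
        raise ValueError("image size must be a multiple of the electrode grid")
    block_h, block_w = height // rows, width // cols
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Average the pixels that fall under this electrode.
            total = sum(
                image[y][x]
                for y in range(r * block_h, (r + 1) * block_h)
                for x in range(c * block_w, (c + 1) * block_w)
            )
            row.append(total / (block_h * block_w))
        grid.append(row)
    return grid


def stimulation_pattern(grid, threshold=0.5):
    """Switch on the electrodes whose region is brighter than `threshold`."""
    return [[value > threshold for value in row] for row in grid]


# Usage: a 64 x 128 frame whose left half is bright (an obstacle on the left).
frame = [[1.0] * 64 + [0.0] * 64 for _ in range(64)]
pattern = stimulation_pattern(image_to_electrodes(frame))
print(sum(v for row in pattern for v in row))  # 256
```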

Image courtesy of Hiroyuki Kajimoto, University of Tokyo and ACM Siggraph 2006

The Virtual Open Heart Surgery display featured work that is currently being used in Denmark. It allows doctors to train for open heart surgery in a non-invasive manner. It also allows MRI data from surgery patients to be loaded into the simulator, so a doctor can practice under the exact conditions they will face in the upcoming surgery.

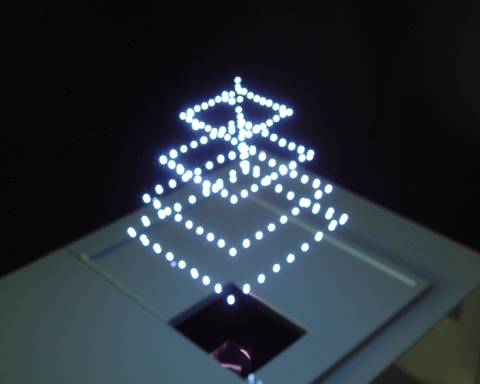

The noisiest display in the venue was the 3D Laser Display. Where two laser beams cross within a controlled area, a bright dot of light (and its accompanying crackle) appears. By using computers to control the precise position of each laser, the team created a series of simple shapes that moved about the area. This could well be a precursor to true 3D holographic displays.

Image courtesy of Hidei Kimura, Burton, Inc. and ACM Siggraph 2006

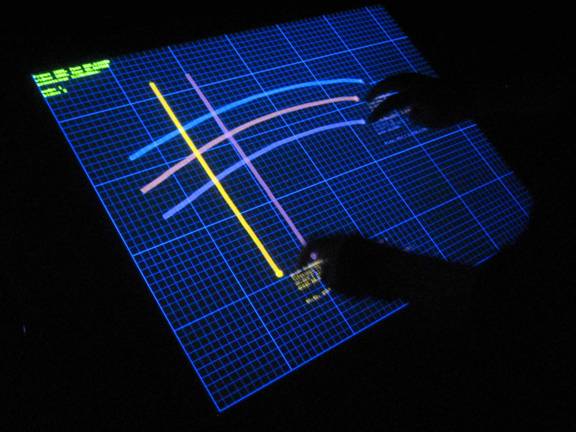

The Touch Screen Wall display featured a large 16-foot screen onto which a series of images was projected. The wall allowed multiple users to interact with the images simultaneously, providing an excellent interface for collaborative work.

Image courtesy of Jefferson Han, Courant Institute of Mathematical Sciences and ACM Siggraph 2006

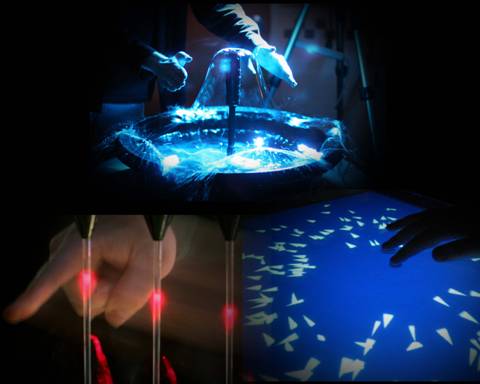

The Mitsubishi Electric Research Lab (MERL) featured three unique demonstrations that used water. The water harp let users create music by interrupting continuous streams of water falling between two sensors. The second demonstration placed a display screen under a large square dish of water; when the water was disturbed, the displayed fish fled from the disturbed area. The third featured a fountain whose flow could be altered by moving one's hands closer to or farther from it. This worked by sensing changes in the capacitance of the electrical system.

Image courtesy of Paul Dietz, MERL and ACM Siggraph 2006

The Powered Shoes display let users of a VR system move through a virtual world by walking in shoes fitted with powered wheels that keep them in the same place. Because users perform the actual motion of walking, the simulation feels more convincing.

Of the several haptics displays, two were notable. A team from the Tokyo Institute of Technology developed a system they called the "Powder Screen," which included a pool filled with polystyrene beads. The system was demonstrated with a haptic fishing device that let users fish in a virtual stream and experience the thrill of struggling to land a fish.

Image courtesy of Masayoshi Ohuchi, Tokyo Institute of Technology and ACM Siggraph 2006

The second notable haptic display was titled, Perceptual Attraction Force. It used a force-feedback system to simulate accelerations and an increase in weight. The device was designed to be mobile and could be used outdoors.

In the Fusion Midway area, the Digiwall display featured a rock climbing wall with several hand grips. Each grip could be lit, and sensors detected when a grip was grabbed. Using these sensors, the team created several games; in one, a player climbs the wall until touching a lit grip, at which point another grip lights up.

Image courtesy of Mats Lijedahl, The Interactive Institute and ACM Siggraph 2006

Autodesk Users Meet at Siggraph 2006

Autodesk users gathered at Siggraph 2006 to see Autodesk's latest developments. The meeting started with a demo team that walked through the entire pipeline for an animation of a frog romancing his girlfriend at a carnival. The initial sketches were created in Sketchbook and pulled directly into Max, where the 3D modeling was completed. The assets were then transferred to MotionBuilder, where the shots were blocked and animated. Using the FBX format, the assets were then moved into Maya, where they were rendered. Finally, the rendered shots were composited in Combustion. The demo showed how Autodesk tools cover the entire production pipeline.

Autodesk Masters Awarded

Six individuals were recognized as Autodesk Masters for their contributions to the industry and to the Autodesk community: Bobo Petrov, Paul Thuriot, Lon Grohs, T.J. Galda, Neil Blevins, and Chris White.

Autodesk Clients Show-Off

Several Autodesk customers were invited on stage to show off recent successes produced with Autodesk tools. Tim Naylor from Industrial Light and Magic showed how Davy Jones was created for the movie Pirates of the Caribbean: Dead Man's Chest. In particular, Tim showed how Autodesk tools were used to create the tentacles of Davy Jones' beard. He also showed some humorous takes in which the pirates swordfought with light sabers and the rolling water wheel was swapped for a Krispy Kreme doughnut.

Chris Bond and Chris Harvey of Frantic Films showed how Autodesk products were used to create digital effects for the movie Superman Returns. Some of the waterfront effects involved particle systems with over 1 billion particles per frame.

Stu Maschwitz of the Orphanage humorously observed that now that Autodesk owns both Max and Maya, "What else would you use?"

Didier Madok of GMJ, an architectural design firm, concluded the client demonstrations by showing off a 36-square-kilometer model of London built in Max using 12 million polygons. Despite the size of the dataset, the 64-bit version of 3ds Max was able to display and work with this enormous scene.

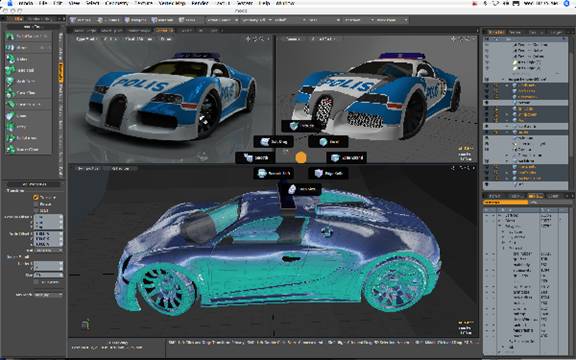

New 3ds Max 9 Features

The Autodesk meeting then changed gears to show off the new features in the soon-to-be-released 3ds Max 9. This release focuses on speed, pipeline and performance in response to feedback from Max users. It also includes mental ray 3.5 with an implementation that is easier to use: users had complained that while mental ray is great, its various settings were hard to figure out. Users can now set up a mental ray rendering with only 3 clicks. Max 9 also includes a new car paint shader and a set of architectural materials with ambient occlusion.

The biggest news with Max 9 is the inclusion of a 64-bit version of the application, which will enable users to display and manipulate large datasets. The new Point Cache 2 modifier lets users bake animation keys into a model and transfer the result seamlessly to Maya. The demo of this modifier showed a scene with 3 million animated polygons that played back without a hitch. The FBX format has also been updated, allowing better integration with MotionBuilder.

Maya 8 Available Now

The new features in Maya 8 were also shown at the meeting, along with an announcement that Platinum Subscription members can download Maya 8 today. Like Max 9, Maya 8 development focused on improving performance and efficiency, including a 64-bit version and scalable threading.

Other new features include the ability to transfer attributes between objects. The viewport can be set to display the scene using Direct3D, so users can see their scene just as it would appear in the game engine. Maya 8 also features better interoperability with Toxik.

New Project Portal Announced

Autodesk announced a new project portal that is based on the Maya community portal and expanded to include all Autodesk products. The portal, named Area, will go live on August 22.

Autodesk Previews New Technologies

To conclude the meeting, Autodesk showed off some of its own research. The Gepetto system analyzes and combines multiple animation clips in real time to produce a smooth, fluid resulting animation. This will allow directors to control the motions of a character without having to regenerate keyframes.

The Maya research team showed off an extensible unified solver for dynamic motions that behave realistically.

Luxology Interview

On the last day of the conference, I had a chance to sit down with Brad Peebler and Bob Bennett of Luxology to see the improvements and latest developments in their flagship product, modo. Modo is a 3D modeling and rendering package that is shaking up the industry with its smooth, elegant interface, cutting-edge modeling tools and lightning-fast rendering. Commenting on the interface, Bob Bennett was quick to say, "Details matter," which sums up modo succinctly.

Image courtesy of Martin Almstrom and Luxology

Developed by Brad Peebler, Allen Hastings and Stuart Ferguson, the key people behind LightWave, modo represents a fresh start, built from the ground up with the end user in mind. Arriving after the feature wars that loaded many 3D packages with unnecessary baggage, modo is coming on strong as an efficient alternative.

Modo 101 was released in September 2004 with a core set of modeling features and was quickly followed by versions 102 and 103. Modo 201, released earlier this year, added rendering, painting, instancing and mesh paint features. The 201 release also included the ability to customize the interface and mouse buttons, along with a simple macro recording feature similar to Photoshop's actions. Luxology also released a Linux version at about the same time.

Modo 202 was released during the Siggraph 2006 conference and boasts a 40% speed increase over modo 201. New 202 features include UV overlap display and UV pinning, an object-to-object baking feature that lets you transfer surface details between objects, the ability to work in game units, an image ink feature, FBX improvements, and real-time normal map editing.

Image courtesy of Paul Hammel and Luxology

When asked about this rapid development and release cycle, Brad explained that Luxology's development platform is based on a system they've established named Nexus. The platform uses a process of application baking, in which features are defined in high-level XML. This allows them to release one version of the software while development on the next continues. It appears that the modo development team isn't taking any time to rest.

Image courtesy of Ahmed Alireza and Luxology

Several game companies have chosen modo for creating their game assets, including id Software, Epic, Digital Extremes, and Vivendi.

Luxology offers a free 30-day full-featured evaluation version of modo on their web site at www.modo3d.com.

NaturalMotion Interview

I had a chance to meet with Torsten Riel and Simon Mack of NaturalMotion aboard the Lady Christine, a yacht they anchored in Boston Harbor during the show. Although it was more interesting than the standard hotel suite, I'm thankful I didn't get seasick during the demos.

When first asked whether I knew anything about the company, I responded that I thought NaturalMotion did something with motion capture, but I was quickly corrected. NaturalMotion deals with Dynamic Motion Synthesis (DMS), which simulates motion using behaviors that imitate the human nervous system. A good example occurs when a character is pushed backwards. In a traditional keyframe animation, the character simply falls and hits the ground, but in a DMS simulation the character struggles to maintain its balance and, when toppled, reaches a hand backwards to break the fall, as a human naturally would. An expensive motion capture system would record the fall precisely, but if the environment changed, for example by adding a block behind the character, another capture session would be required. The DMS system, by contrast, can simulate the new environment using the given behaviors.
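NaturalMotion's behaviors are proprietary, but the contrast between a canned fall and an active reaction can be illustrated with a much simpler toy model: the character's body treated as an inverted pendulum, with a proportional-derivative (PD) controller standing in for the "nervous system" that pushes back against a shove. The function, gains and physical constants below are illustrative assumptions, not NaturalMotion's method.

```python
import math


def simulate_balance(push_velocity, kp=50.0, kd=10.0, duration=3.0, dt=0.01,
                     gravity=9.81, length=1.0, mass=1.0):
    """Toy inverted-pendulum 'body' that reacts to a shove with a PD controller.

    push_velocity: angular velocity (rad/s) imparted by the shove.
    kp, kd: hypothetical proportional and derivative gains of the controller.
    Returns (final_lean_angle, fell_over).
    """
    theta, omega = 0.0, push_velocity  # lean angle from upright, and its rate
    inertia = mass * length ** 2
    for _ in range(int(duration / dt)):
        # "Reflex" torque that pushes back against the lean.
        torque = -kp * theta - kd * omega
        # Gravity tips the body further; the controller torque resists it.
        alpha = (gravity / length) * math.sin(theta) + torque / inertia
        omega += alpha * dt  # semi-implicit Euler integration
        theta += omega * dt
        if abs(theta) > math.pi / 2:
            return theta, True  # toppled past horizontal
    return theta, False


theta, fell = simulate_balance(push_velocity=1.0)
print(fell)  # False: the controller recovers balance
theta, fell = simulate_balance(push_velocity=1.0, kp=0.0, kd=0.0)
print(fell)  # True: with no active control the body topples
```

Because the reaction is computed rather than played back, changing the push or the environment changes the outcome without recording a new clip, which is the property the paragraph above attributes to DMS.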

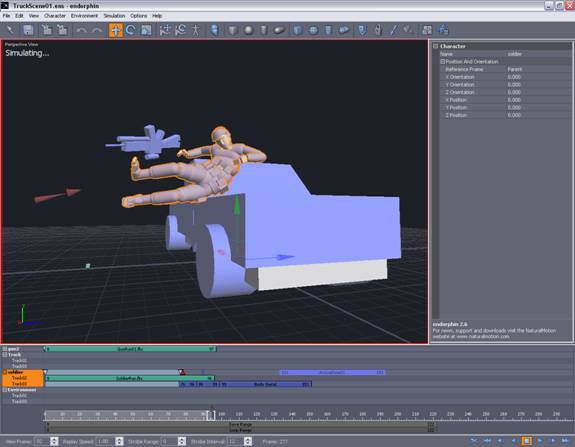

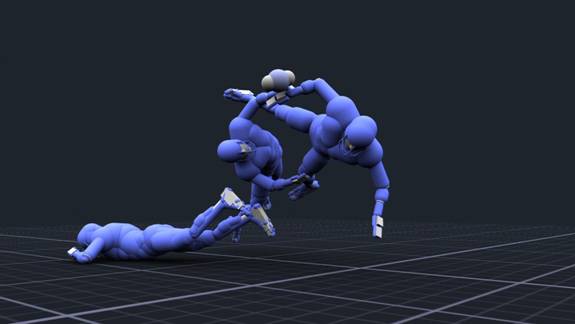

The DMS technology has been integrated into three products: Endorphin, a stand-alone application for creating and capturing DMS motions; Euphoria, a runtime version of the technology optimized for next-gen games; and Morpheme, a set of tools that enables animators to define the realistic motion of characters. With these products, you can add character motions that are more realistic than those of any other dynamic system I've seen.

Endorphin

The first product developed and released by NaturalMotion was Endorphin. Version 2.6 was released earlier this year at GDC. Endorphin lets you define behaviors and then view the results. The characters use the defined behaviors to accomplish their task, whether it is tackling another character, remaining standing on a moving platform, or fighting with another character.

Using blending controls, you can move easily between keyframed animation and simulated motion. You can also constrain certain body parts during the simulation, giving you unprecedented control over the motion of your character. The resulting motions can then be converted to keyframes and exported to several 3D formats, including 3ds Max, Biovision, FBX, Softimage and Vicon. You can also export motions directly to AVI video from Endorphin.
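The blending controls described above can be pictured as a weight that ramps a character from keyframed to simulated motion over a range of frames. The sketch below is a hypothetical illustration, not Endorphin's API: the function names and joint values are made up, and it interpolates per-joint angles linearly, whereas production tools typically blend rotations with quaternions.

```python
def blend_weight(frame, start, end):
    """Ramp from 0 (fully keyframed) at `start` to 1 (fully simulated) at `end`."""
    if end <= start:
        raise ValueError("end must be after start")
    if frame <= start:
        return 0.0
    if frame >= end:
        return 1.0
    return (frame - start) / (end - start)


def blend_pose(keyframed, simulated, weight):
    """Linearly interpolate per-joint angles (degrees) between two poses."""
    return {
        joint: (1.0 - weight) * angle + weight * simulated[joint]
        for joint, angle in keyframed.items()
    }


# Hand a keyframed pose over to the simulation between frames 10 and 20.
key_pose = {"hip": 20.0, "knee": 40.0}
sim_pose = {"hip": 60.0, "knee": 10.0}
w = blend_weight(15, 10, 20)
print(blend_pose(key_pose, sim_pose, w))  # {'hip': 40.0, 'knee': 25.0}
```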

A Learning Edition of Endorphin, which has over 25,000 users, is available. It is limited to exporting video only. You can download the Learning Edition at www.NaturalMotion.com.

Endorphin was recently used by Giant Killer Robots to create several digital shots for the movie, Poseidon. Rather than using complex keyframing or dangerous motion capture, the studio simulated several stunt shots that occur when the boat capsizes using digital characters and NaturalMotion's Endorphin.

Endorphin has also been used by numerous game studios to create motions for games including Medal of Honor: European Assault and Colin McRae 3.

Euphoria

The technology behind Endorphin has also been built into NaturalMotion's Euphoria product, which enables dynamic motion synthesis in real time on next-gen platforms including the Xbox 360, PlayStation 3 and PC. By embedding the NaturalMotion technology within the real-time game engine, Euphoria enables "unique game moments": a character hit with a deadly blow falls realistically based on its surroundings instead of playing a canned animation that gamers quickly recognize and grow tired of.

Euphoria includes a simple messaging API that ties it to the console's game engine. It also includes a feedback loop for programmatic control, and behaviors can be overridden as needed. Euphoria will be used by LucasArts in its upcoming 2007 Indiana Jones title.

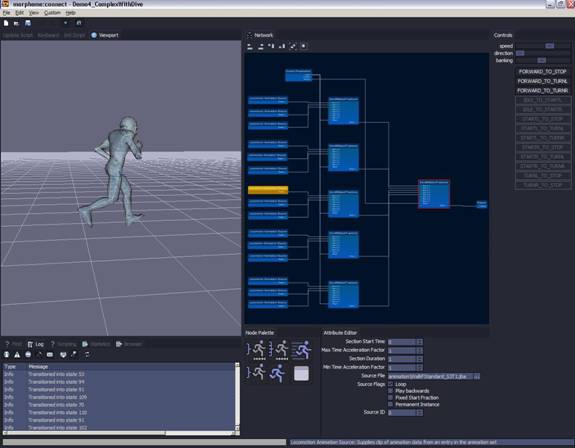

Morpheme

NaturalMotion's third product was announced and released during the show. Morpheme is a set of animation tools that gives animators a way to define and work with blend curves and state machines. It is specifically designed for next-generation games. By giving animators a way to define blend curves and animation logic, Morpheme lets next-gen characters be endowed with realistic motions that are unique every time.

Morpheme consists of two components. The morpheme:runtime component is a runtime engine optimized for the various consoles, and the morpheme:connect component is an authoring tool that lets animators build and edit state machines and view the results in real time. The components also include hooks to tie into other 3D animation packages and existing physics engines such as Ageia and Havok.
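To make the idea of state machines and blend curves concrete, here is a minimal sketch of an animation state machine that cross-fades between clips using a smoothstep blend curve. The class, state names and transition times are hypothetical and do not reflect morpheme:runtime's actual API.

```python
def smoothstep(t):
    """Ease-in/ease-out blend curve on [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


class AnimStateMachine:
    """Tiny animation state machine that cross-fades between states."""

    def __init__(self, initial, transitions):
        self.current = initial
        self.transitions = transitions  # {(from_state, to_state): seconds}
        self.previous = None
        self.elapsed = 0.0
        self.duration = 0.0

    def request(self, target):
        """Start a transition if one is defined from the current state."""
        duration = self.transitions.get((self.current, target))
        if duration is None:
            return False
        # Simplification: interrupting a transition drops the old source clip.
        self.previous, self.current = self.current, target
        self.elapsed, self.duration = 0.0, duration
        return True

    def update(self, dt):
        """Advance time and return the blend weight for each active clip."""
        if self.previous is None:
            return {self.current: 1.0}
        self.elapsed += dt
        t = self.elapsed / self.duration if self.duration > 0 else 1.0
        if t >= 1.0:
            self.previous = None
            return {self.current: 1.0}
        w = smoothstep(t)
        return {self.previous: 1.0 - w, self.current: w}


sm = AnimStateMachine("idle", {("idle", "walk"): 0.5, ("walk", "run"): 0.3})
sm.request("walk")
print(sm.update(0.25))  # {'idle': 0.5, 'walk': 0.5}
print(sm.update(0.25))  # {'walk': 1.0}
print(sm.request("idle"))  # False: no walk -> idle transition is defined
```

Keeping the transition table as data is what lets an authoring tool edit the logic without touching engine code, which is the division of labor the two Morpheme components suggest.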

Morpheme will be available in October for PlayStation 3, Xbox 360 and PC.

More information on each of these products and on NaturalMotion can be found at the company's web site, www.naturalmotion.com.

Date this article was posted to GameDev.net: 8/26/2006

(Note that this date does not necessarily correspond to the date the article was written)

© 1999-2011 Gamedev.net. All rights reserved.